Key figures from across Luxembourg’s datacenter community recently joined SnT and Digital Luxembourg to hear a panel of international speakers discuss the technological changes that will drive tomorrow’s computing needs. Experts agree that near-future technological developments will require access to reliable cloud services. That’s because the number of advanced use cases relying on GPUs has increased exponentially over the past couple of years, and there is no sign of this trend slowing down.

Big Data Technologies and Applications, and Their Requirements

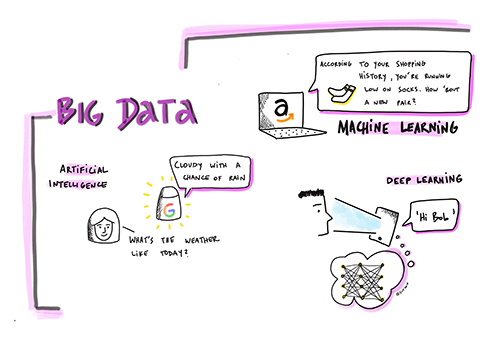

Big Data is a popular buzzword these days, but so are Artificial Intelligence, Machine Learning, and Deep Learning. How do all these fit together?

Big Data: voluminous and complex data sets that traditional data-processing application software can’t cope with. Big Data is often broken into four dimensions – or four Vs: volume, variety, velocity, and veracity. That’s because, beyond the sheer volume and complexity of the data, current technology must also handle the speed at which data arrives and its quality in order to produce insights and value.

Artificial Intelligence: machines that work and react with human-like intelligence. AI covers any computer system that can sense, think and act in an environment towards a goal. Apple’s Siri is a well-known example of AI.

Machine Learning: the study and construction of algorithms that can learn from and make predictions on data. Amazon uses Machine Learning to tailor shopping recommendations according to your previous purchases or activity.

Deep Learning: Machine Learning methods that excel thanks to their ability to extract data representations on their own, rather than relying on humans to define which features of the data matter. Self-driving cars and advanced facial recognition systems use this technology.

These technologies require reliable, fast computing power. Traditional CPUs, however, can only handle a few tasks at a time. GPUs, with their thousands of cores, make it possible to build networks with millions of connections, loosely modelled on the human brain. “In these networks, lots of nodes perform simple calculations in parallel, doing the same thing at the same time,” explained Dr. Anand Rao, Global AI Lead at PwC. “This allows them to crunch enormous volumes of data again and again.” The result is software that can learn to recognize patterns in order to perform tasks such as decoding the structure of proteins and DNA, recognizing faces and driving cars.

Rao also explained that, with the arrival of GPUs over the last ten years, Deep Learning has given new momentum to Artificial Intelligence, a concept that is some 60 years old.

Dr. Anand Rao, Global AI Lead at PwC

Meanwhile, Alison B. Lowndes, in charge of Artificial Intelligence Developer Relations for EMEA at NVIDIA, became involved in Deep Learning while developing algorithms to spot cell irregularities that escaped even the most experienced radiographers. In her talk, she emphasised the extent to which different circumstances had aligned to make Deep Learning a scalable reality. In particular, reaching the limits of Moore’s Law had forced the ICT community to look beyond CPUs. She recommended that attendees keep an eye out in 2018 for the dozens of companies currently creating brand-new custom chips designed specifically for AI, along the lines of Google’s Tensor Processing Units (TPUs).

Expensive Infrastructures

Of course, discussion also focused on the fact that most companies cannot afford to invest in their own CPU and GPU clusters. They require access to reliable cloud services in order to implement Deep Learning and Big Data technologies. Cloud services enable them to rent computing power, increasing capacity as their business grows. As a result, the initial investment required by a tech startup decreases substantially, lowering the barriers to market entry.

Dr. Radu State, researcher at SnT

Luxembourg has a vibrant community of datacenter service providers and infrastructure operators. “To ensure technological readiness, it’s vital to enable open conversations about GPU clusters, FPGAs, deep learning in the cloud, robotics, data analytics and other concrete use cases for the computing needs of tomorrow,” said Dr. Radu State, researcher at SnT. With Luxembourg’s expertise, our ecosystem is set to play a substantial role in next-generation computing!

Stay tuned for future events on datacenters and big data technologies.