Last week we published the news that the first Luxembourgish AI model, LuxemBERT, was created in a partnership with BGL BNP Paribas. Here we go behind the story and get to know the PhD student who worked on the project, Cedric Lothritz, and what the research entails.

Please read the original news story here.

1. What is LuxemBERT?

LuxemBERT is what’s called a language model. Simply put, a language model is a mathematical model that translates written text (something that humans can understand) into vectors/lists of numbers (something that a computer can understand). The general idea is that language models are trained to translate texts with a similar meaning into similar vector representations.

For example:

Rome and Paris are similar in a lot of ways, e.g. both are capital cities, both are in Europe, etc. As such, their vectors are mostly very similar, but still different in some ways, so they can be differentiated. There is a huge number of language models that are specialised to understand other languages and even specific domains, but LuxemBERT is the first model of its kind built for the Luxembourgish language.

2. How were BGL BNP Paribas involved in the project?

BGL BNP Paribas supported the project from the start. We had a continuous discussion through weekly meetings to exchange technical expertise from both teams, review the progress of the model and get ideas to get more usable data. They also helped me scrutinize and challenge the results of the experiments we did to evaluate the performance of LuxemBERT. And now, BGL BNP Paribas is making the model available to the wider public, which will allow it to flourish as it can be used by the Luxembourgish AI community to build various applications.

3. How did you build LuxemBERT?

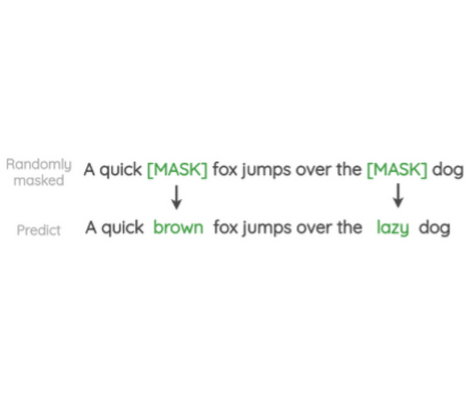

BERT models are already commonly known in the NLP community. The main learning algorithm is known as “Masked Language Modeling”. The general idea to “teach” the model the relations between words is by essentially letting it solve Mad Libs puzzles. For this, the learning algorithm selects a sentence, randomly masks out some words in a first step, and then asks the model to reconstruct the original sentence in a second step.

Here an example:

https://amitness.com/2020/05/self-supervised-learning-nlp/.

The algorithm picked the above sentence, and masked out the words “brown” and “lazy”. The model will then try to replace the masks with the correct words. If it succeeds, the model will adjust itself one way; if it makes a mistake, it will adjust itself in another way.

The main challenge is the amount of text data we need to train the model. BERT models are very hungry for a lot of data which is readily available for widely spoken languages such as English or German, but it’s rather scarce for a language like Luxembourgish. We managed to collect 6 million written sentences in Luxembourgish, and that sounds like a lot, until you consider that the original English BERT model was trained on 130 million sentences. In order to mitigate the lack of authentic data, we applied a technique known as data augmentation which consists of artificially modifying given data in order to create new training data for a machine learning model. For LuxemBERT, we came up with the idea to partially translate unambiguous words from a German dataset to Luxembourgish in a systematic way.

For example:

https://orbilu.uni.lu/handle/10993/51815.

The words in red circles are translated to Luxembourgish (green circles), creating a German/Luxembourgish mix. As German and Luxembourgish mostly share the same grammar, this kind of simplistic translation preserves the proper syntax of the sentence. We can use this new sentence as a new data sample for our language model. Using this technique, we artificially created 6 million additional sentences to train LuxemBERT.

4. Why did you focus on this research area during your PhD?

First and foremost, I am fascinated by how machines can extract proper meaning from natural language, an imprecise form of communication full of nuance, exceptions, and ambiguity; and one that is continuously evolving with time. LuxemBERT in particular, was an opportune convergence of my research interests and skills and the research challenge that BGL BNP Paribas had at that time. They were looking to develop more natural language processing tools, in a low-resource context. The main issue of learning on text is the massive amount of data required, most of the time not available for an industry-specific use case. Since SnT and BGL already had a strong partnership, the team quickly offered to kick-off the new project and recruit a natural language processing PhD student. To develop the new techniques required by this industrial topic, we seized the opportunity of creating a model in Luxemburgish: there was none available, so there was a need in the AI community.

5. Why is it important?

As the world becomes more and more automated and reliant on AI to complete tasks, we need to put more research in building reliable and well-performing AI models. In NLP, lots of research to create such models is done for widespread languages such as English, Chinese, Spanish, or German, but not for so-called low-resource languages that are spoken by only a few thousand people. As such, it is important to make an effort to preserve those low-resource and endangered languages. You could say that, as one of relatively few Luxembourgish NLP researchers, it was my duty to build a language model for the Luxembourgish language.

6. It is open-source so what use-cases could there be for it?

Language models can be used and further trained to solve specific NLP problems. Use cases include chatbots, spell checkers, sentiment analysis of customer feedback, classification of emails, automatic translation of texts to another language, and many more.

7. Now that your PhD is coming to an end, what does your future hold?

It is hard to say. I would like to stay in research, as I am still having fun and it allows me to explore a wide variety of new topics while making me feel like I accomplished something and contributed something useful. However, I am also open for opportunities outside of academia.